Azure Data Factory (ADF) continues to lead as a versatile and scalable data integration service in the cloud. Its ability to parameterize various components has dramatically enhanced flexibility and efficiency when building data workflows. In this blog, we will focus on how to leverage parameterization in ADF with a specific use case for loading customer data dynamically into SQL tables. We will also cover recent updates from 2024 that further improve this functionality.

What is Parameterization in Azure Data Factory?

Parameterization in Azure Data Factory allows you to pass values dynamically into pipelines and datasets, reducing the need for hardcoding and making the solution scalable and reusable. This becomes particularly useful when managing datasets with similar structures, but for different data sources, such as multiple customer data files.

Types of Parameterization:

Pipeline Parameters: These are global to the entire pipeline and can be used to pass values like file paths, table names, or connection strings dynamically when the pipeline is executed.

Dataset Parameters: These allow datasets to be more flexible by letting you define values for specific properties like file names or database tables at runtime.

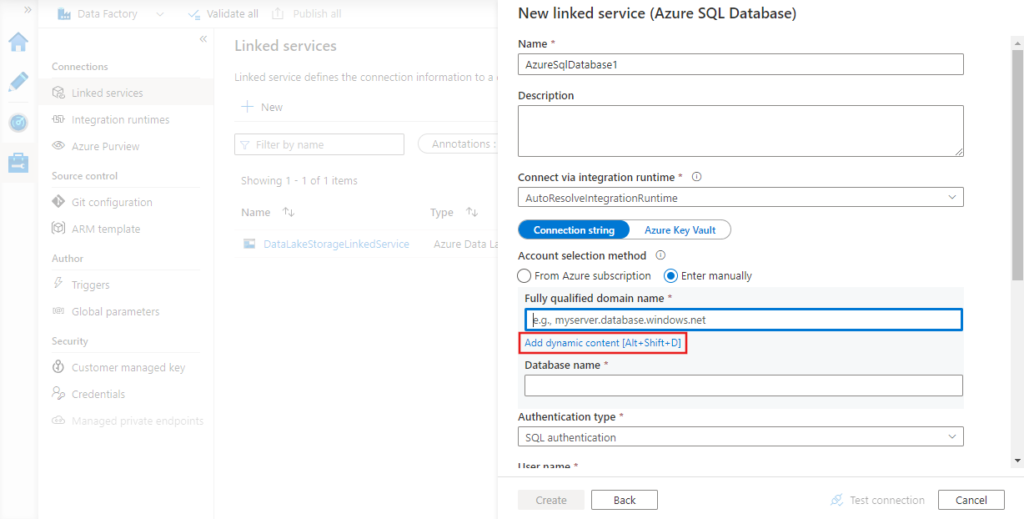

Linked Service Parameters: You can configure linked services to dynamically pass credentials, database names, or endpoints at runtime. This prevents the need for multiple linked services for different environments(MS Learn)(MS Learn).

Latest Updates in Azure Data Factory (2024)

Several key updates in 2024 have made Azure Data Factory’s parameterization more powerful:

Global Parameters: ADF introduced global parameters that allow you to define constants and reuse them across different pipelines. This simplifies managing large environments(MS Learn).

Expanded Data Connectors: ADF now supports more data sources, including connectors for PostgreSQL, Snowflake, Salesforce, and more, enhancing its capability to move data securely between services(MS Learn)(Azure).

Enhanced Linked Service Parameterization: Now you can dynamically pass parameters for various services like SQL Server, SAP HANA, and Amazon Redshift, minimizing the need for hardcoded values(MS Learn).

Case Study: Importing Customer Data into SQL Using Parameterization

Let’s consider a scenario where you need to load customer data from multiple CSV files into an SQL database. Without parameterization, you’d have to create separate datasets and pipelines for each file and table. Parameterization makes it much easier to handle dynamic file paths and table names. Here’s how to set it up:

Step 1: Define Parameters in the Dataset

Start by creating parameters for FileName and TableName in your dataset:

json{

"filePath": "@{pipeline().parameters.FileName}"

}

This allows the file path to be set dynamically during pipeline execution.

Step 2: Configure the Pipeline

Next, in the pipeline configuration, set up parameters for FileName and TableName. For example, if you’re importing customer data, you would pass the following values:

json{

"fileName": "customers.csv",

"tableName": "CustomersTable"

}

Step 3: Use the Parameters in Activities

In the pipeline, use the parameters to define both the source and the sink (destination) dynamically. For example, the source could point to the customers.csv file in Azure Blob Storage, and the sink would point to the CustomersTable in SQL.

Step 4: Execution

When you run the pipeline, Azure Data Factory will use the parameters provided at runtime to load the customer data dynamically into the specified SQL table.

Advanced Example: Adding Dynamic Date to File Names

You can extend this further by using expressions in ADF to add dynamic content, such as appending dates to file names. For instance, you can use the concat function to add the current date to the file name:

json{

"fileName": "@concat('customers-', utcNow(), '.csv')"

}

This will ensure that each file gets a unique name with the current date attached, making it easier to manage and track files over time. (MS Learn).

Best Practices for Parameterizing Workflows

Use Global Parameters: Define global parameters for values that remain constant across pipelines, such as folder paths or connection strings. This will reduce redundancy and make your pipelines easier to maintain(MS Learn).

Secure Credentials with Azure Key Vault: Avoid parameterizing sensitive information like passwords. Instead, use Azure Key Vault to store and retrieve sensitive information securely during pipeline execution(MS Learn).

Leverage Linked Service Parameterization: Use linked service parameters to dynamically switch between databases, storage accounts, or APIs at runtime. This reduces the need for creating multiple linked services for different environments(MS Learn).

Conclusion

Azure Data Factory’s parameterization capabilities allow you to create flexible, scalable, and efficient data pipelines. The ability to pass dynamic values for file names, table names, and connection properties at runtime simplifies the management of complex workflows. The latest updates in 2024, such as global parameters and expanded connector support, further enhance ADF’s flexibility, making it an essential tool for enterprise data integration.

By using these techniques, you can streamline processes such as importing customer data from CSV files into SQL tables dynamically, minimizing manual work and reducing redundancy in your data workflows.

For more in-depth tutorials and detailed documentation, visit the Azure Data Factory documentation(MS Learn)(MS Learn)(Azure).