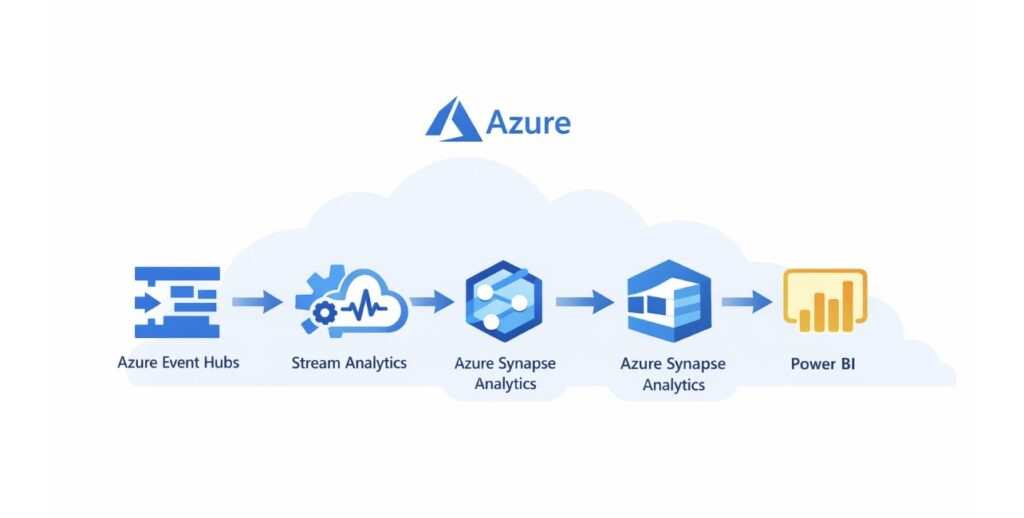

Overview. This pipeline ingests and processes real‑time IoT telemetry using Azure services. Devices send sensor data to Event Hubs, which buffers the incoming stream. Azure Databricks (or Azure Stream Analytics) processes and enriches the data before storing results in Cosmos DB for operational queries. Analytical reporting is delivered through Microsoft Fabric or Power BI.
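
A minimal device-side sketch of the ingest step, assuming the Python `azure-eventhub` (v5) SDK. The JSON field names and the helpers `make_telemetry_event` and `send_telemetry` are hypothetical, and the connection string and hub name are placeholders you would supply. The SDK is imported inside `send_telemetry` so the payload helper can be used on its own.

```python
import json
from datetime import datetime, timezone


def make_telemetry_event(device_id: str, temperature: float, humidity: float) -> str:
    """Serialize one sensor reading as JSON (hypothetical schema)."""
    reading = {
        "deviceId": device_id,
        "ts": datetime.now(timezone.utc).isoformat(),  # UTC, ISO 8601
        "temperature": temperature,
        "humidity": humidity,
    }
    return json.dumps(reading)


def send_telemetry(conn_str: str, hub_name: str, events: list[str]) -> None:
    """Send JSON events to Event Hubs with the azure-eventhub SDK (v5)."""
    # Imported lazily so make_telemetry_event works without the SDK installed.
    from azure.eventhub import EventData, EventHubProducerClient

    producer = EventHubProducerClient.from_connection_string(conn_str, eventhub_name=hub_name)
    with producer:
        batch = producer.create_batch()
        for body in events:
            try:
                batch.add(EventData(body))
            except ValueError:
                # Batch reached its size limit: send it and start a new one.
                producer.send_batch(batch)
                batch = producer.create_batch()
                batch.add(EventData(body))
        if events:
            producer.send_batch(batch)
```

Batching keeps the number of network round trips low, which matters for constrained devices; Event Hubs then buffers the stream for downstream consumers.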

Why this architecture? Microsoft’s reference architecture describes a four‑step flow: ingest, process, store and analyze. Event Hubs reliably ingests high-volume event streams; Databricks correlates and enriches those streams; Cosmos DB stores the processed events; and Fabric or Power BI serves dashboards over the stored data without an additional ETL step. Because storage is decoupled from compute, each tier can be scaled, and paid for, independently.
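
To make the process and store steps concrete, the sketch below averages temperature per device over fixed 60-second tumbling windows, enriches each result with a site name from reference data, and upserts the results into Cosmos DB with the `azure-cosmos` SDK. It is plain Python that only illustrates the computation; in this architecture the same logic would run as a Databricks Structured Streaming job or a Stream Analytics query. The window size, field names, the `DEVICE_SITES` lookup and the `deviceId` partition key are all assumptions.

```python
from collections import defaultdict
from datetime import datetime

WINDOW_SECONDS = 60  # hypothetical tumbling-window size

# Hypothetical reference data used to enrich raw readings.
DEVICE_SITES = {"sensor-01": "plant-a", "sensor-02": "plant-b"}


def window_start(ts: str) -> int:
    """Epoch second at which the tumbling window containing `ts` begins."""
    epoch = int(datetime.fromisoformat(ts).timestamp())
    return epoch - epoch % WINDOW_SECONDS


def aggregate(readings: list[dict]) -> list[dict]:
    """Average temperature per (device, window), enriched with the device's site."""
    totals: dict = defaultdict(float)
    counts: dict = defaultdict(int)
    for r in readings:
        key = (r["deviceId"], window_start(r["ts"]))
        totals[key] += r["temperature"]
        counts[key] += 1

    items = []
    for device, start in sorted(totals):
        items.append({
            # Cosmos DB items need a string "id"; deriving it from device and
            # window makes reprocessing the same window an idempotent upsert.
            "id": f"{device}-{start}",
            "deviceId": device,  # assumed partition key
            "site": DEVICE_SITES.get(device, "unknown"),
            "windowStart": start,
            "avgTemperature": totals[(device, start)] / counts[(device, start)],
            "count": counts[(device, start)],
        })
    return items


def store(items: list[dict], url: str, key: str, database: str, container: str) -> None:
    """Upsert processed items with the azure-cosmos SDK (pip install azure-cosmos)."""
    from azure.cosmos import CosmosClient  # lazy import: only this step needs the SDK

    client = CosmosClient(url, credential=key)
    target = client.get_database_client(database).get_container_client(container)
    for item in items:
        target.upsert_item(item)
```

Keeping `aggregate` free of I/O means the windowing logic can be tested without any Azure resources, while `store` stays a thin wrapper over the SDK.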

Best practices. Azure analytics guidelines recommend orchestrating ingestion with a pipeline tool such as Azure Data Factory, scrubbing sensitive data as early as possible, and maintaining a single source of truth in a data lake. In IoT scenarios these practices help enforce data quality and governance. Combining streaming and batch processing in Databricks also simplifies machine learning use cases such as anomaly detection on sensor readings, because models can be trained on historical data and applied to the live stream on the same platform.
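
A sketch of two of these practices, under stated assumptions: `scrub` drops hypothetical sensitive fields and pseudonymizes a device serial number with a keyed hash (HMAC-SHA256) before anything is stored, and `zscore_flags` marks readings more than three standard deviations from a trailing-window mean. The z-score rule is a simple statistical stand-in for the machine learning models mentioned above, not one of them; the field names, secret key, window size and threshold are all illustrative.

```python
import hashlib
import hmac
import statistics
from collections import deque

SENSITIVE_DROP = {"ownerEmail", "gpsLocation"}  # hypothetical fields to remove
PSEUDONYMIZE = {"deviceSerial"}                 # hypothetical fields to hash


def scrub(reading: dict, secret: bytes) -> dict:
    """Drop sensitive fields and replace identifiers with HMAC-SHA256 digests."""
    clean = {}
    for field, value in reading.items():
        if field in SENSITIVE_DROP:
            continue
        if field in PSEUDONYMIZE:
            # Keyed hash: stable enough to join on, not reversible without the secret.
            value = hmac.new(secret, str(value).encode(), hashlib.sha256).hexdigest()
        clean[field] = value
    return clean


def zscore_flags(values: list[float], window: int = 20, threshold: float = 3.0) -> list[bool]:
    """Flag values more than `threshold` standard deviations from the trailing-window mean."""
    history = deque(maxlen=window)  # only the most recent `window` readings
    flags = []
    for v in values:
        if len(history) >= 2:
            mean = statistics.fmean(history)
            stdev = statistics.stdev(history)
            flags.append(stdev > 0 and abs(v - mean) / stdev > threshold)
        else:
            flags.append(False)  # not enough history to judge yet
        history.append(v)
    return flags
```

Scrubbing runs before the reading reaches any store, so downstream consumers, including the anomaly detector, only ever see pseudonymized identifiers.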