Azure Data Factory (ADF) is a robust and scalable cloud-based data integration service that allows organizations to create and manage complex data pipelines. At the heart of ADF are activities, which define the operations to be performed on your data. These activities can move, transform, and analyze data across various sources and destinations, enabling efficient workflows in cloud environments.

In this post, we walk through the types of activities available in ADF, grouped by category. Knowing what each activity does, and where to find it, is essential for building pipelines that automate data movement and transformation.

1. Search Activities

Search Activities is not an activity category itself but the search box at the top of the Activities pane in the ADF authoring UI. It lets you look up an activity by name across all categories, which is particularly useful when you’re building a complex pipeline and need to locate one activity in a large library.

Key Features:

Allows you to find specific activities without navigating through categories.

Reduces development time by providing quick access to desired activities.

2. Move and Transform Activities

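Move and transform activities copy data between data stores and transform it, often without writing code. In the ADF authoring UI this category holds Copy Data and Data Flow; the other activities listed below are frequently combined with them but are grouped under General or Iteration & conditionals. Together they are among the most commonly used activities in ADF pipelines.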

Common Activities:

Copy Data: This is the most fundamental activity, used for copying data between different data stores. It supports a wide range of sources and destinations.

Data Flow: This is a visual activity that allows you to design complex transformations using a no-code interface. You can filter, aggregate, and join data as needed.

Stored Procedure: Executes a stored procedure in Azure SQL Database, Azure Synapse Analytics, or SQL Server, so that custom data manipulation runs inside the database itself.

Execute Pipeline: Useful when building modular pipelines, this activity allows you to call another pipeline from within the current pipeline.

Filter: Applies a condition to an input array and returns only the items that satisfy it.

ForEach: A loop activity that iterates over a collection of items, executing one or more activities for each item.

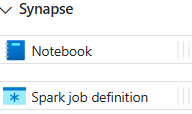
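Behind the visual designer, every activity is stored as JSON. As a minimal sketch, the Python below builds a pipeline containing a single Copy Data activity. The dataset names are hypothetical, and the source and sink type names (`BlobSource`, `SqlSink`) are examples only, since they depend on the connectors you use.

```python
import json

# Hypothetical dataset names; replace with datasets defined in your factory.
SOURCE_DATASET = "BlobInputDataset"
SINK_DATASET = "SqlOutputDataset"


def dataset_ref(name):
    """Reference a dataset by name in an activity's inputs or outputs."""
    return {"referenceName": name, "type": "DatasetReference"}


def copy_activity(name, source_dataset, sink_dataset):
    """Build a Copy activity definition as a plain dict (sketch)."""
    return {
        "name": name,
        "type": "Copy",
        "inputs": [dataset_ref(source_dataset)],
        "outputs": [dataset_ref(sink_dataset)],
        "typeProperties": {
            # Source and sink types vary by connector.
            "source": {"type": "BlobSource"},
            "sink": {"type": "SqlSink"},
        },
    }


copy_pipeline = {
    "name": "CopyPipeline",
    "properties": {
        "activities": [
            copy_activity("CopyBlobToSql", SOURCE_DATASET, SINK_DATASET)
        ]
    },
}

print(json.dumps(copy_pipeline, indent=2))
```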

3. Synapse Activities

Azure Synapse Analytics is deeply integrated with ADF, offering a set of activities designed to work within the Synapse ecosystem.

Key Activities:

Azure Synapse Analytics: SQL queries and stored procedures can be run against a Synapse SQL pool through an Azure Synapse Analytics linked service, typically with the Script or Stored Procedure activity.

Synapse Notebook: You can execute notebooks directly from ADF, leveraging the power of Synapse Spark.

Spark Job Definition: Submits a Synapse Spark job definition to a Synapse Spark pool for distributed data processing, providing a scalable way to handle large datasets.

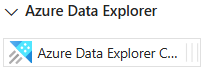

4. Azure Data Explorer Activities

Azure Data Explorer is optimized for interactive, high-performance analysis of big data. ADF supports it with an activity for running commands against an Azure Data Explorer database.

Key Activity:

Azure Data Explorer Command: Runs a Kusto management command against Azure Data Explorer, for example an ingest-from-query command such as .set-or-append that writes query results into a table. This makes it possible to query and reshape large-scale time-series data as a pipeline step.
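To make this concrete, here is a minimal sketch that builds an Azure Data Explorer Command activity as a Python dictionary. The linked service name, the table names `RawEvents` and `DailySummary`, and the column `Timestamp` are hypothetical; the property names follow the activity JSON as we understand it, so check them against your own factory before use.

```python
import json


def adx_command_activity(name, linked_service, command, timeout="00:10:00"):
    """Build an Azure Data Explorer Command activity definition (sketch)."""
    return {
        "name": name,
        "type": "AzureDataExplorerCommand",
        "linkedServiceName": {
            "referenceName": linked_service,  # hypothetical linked service name
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "command": command,
            "commandTimeout": timeout,
        },
    }


# Hypothetical tables: aggregate RawEvents into DailySummary.
adx_activity = adx_command_activity(
    "BuildDailySummary",
    "AdxLinkedService",
    ".set-or-append DailySummary <| RawEvents "
    "| summarize EventCount = count() by bin(Timestamp, 1d)",
)

print(json.dumps(adx_activity, indent=2))
```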

5. Azure Function Activity

Sometimes, you need to run custom code as part of your pipeline. The Azure Function activity provides the flexibility to call serverless functions that can perform complex computations, invoke APIs, or trigger workflows.

Key Features:

Allows running custom scripts or operations that aren’t covered by default activities.

Calls any Azure Function that you expose to the factory through an Azure Function linked service.
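As an illustration, the sketch below assembles an Azure Function activity definition in Python. The function name `ValidateOrders`, the linked service name, and the request body are hypothetical placeholders, and the property names are our best reading of the activity JSON rather than an authoritative schema.

```python
import json


def azure_function_activity(name, linked_service, function_name, body, method="POST"):
    """Build an Azure Function activity definition (sketch)."""
    return {
        "name": name,
        "type": "AzureFunctionActivity",
        "linkedServiceName": {
            "referenceName": linked_service,  # hypothetical linked service name
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "functionName": function_name,
            "method": method,
            "body": body,
        },
    }


# Hypothetical function and payload.
function_activity = azure_function_activity(
    "CallValidateOrders",
    "FunctionAppLinkedService",
    "ValidateOrders",
    {"container": "orders", "strict": True},
)

print(json.dumps(function_activity, indent=2))
```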

6. Batch Service Activities

Azure Batch is a compute management service that allows you to execute parallel jobs across many virtual machines. In ADF, you can trigger batch jobs to perform large-scale compute tasks.

Key Activity:

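Custom: In the ADF authoring UI, the Batch Service category exposes the Custom activity, which runs your own command or executable on an Azure Batch pool of virtual machines. This lets the pipeline hand off high-performance parallel workloads to Batch.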

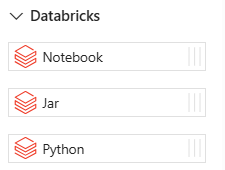
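Batch work is typically submitted through the Custom activity type. The following sketch shows roughly what such a definition could look like when built in Python; the command line, folder path, and both linked service names are hypothetical, and the property names should be verified against your factory.

```python
import json


def linked_service_ref(name):
    """Reference a linked service by name."""
    return {"referenceName": name, "type": "LinkedServiceReference"}


custom_activity = {
    "name": "RunBatchJob",
    "type": "Custom",
    # Hypothetical Azure Batch linked service that points at the pool.
    "linkedServiceName": linked_service_ref("AzureBatchLinkedService"),
    "typeProperties": {
        # Hypothetical command executed on the Batch nodes.
        "command": "python process.py --mode full",
        # Hypothetical storage location holding the script and its files.
        "resourceLinkedService": linked_service_ref("StorageLinkedService"),
        "folderPath": "customactivity/scripts",
    },
}

print(json.dumps(custom_activity, indent=2))
```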

7. Databricks Activities

Azure Databricks is an Apache Spark-based analytics platform optimized for Azure. ADF provides several activities to integrate seamlessly with Databricks for data engineering, data science, and machine learning workflows.

Key Activities:

Databricks Notebook: Executes a notebook within your Databricks workspace, enabling a scripted approach to data processing.

Databricks Python: Runs a Python script on a Databricks cluster, ideal for advanced data transformation and machine learning.

Databricks Jar: Runs a JAR (compiled Java or Scala code) on a Databricks cluster, providing flexibility for users who need to run pre-built JVM code for data processing.
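For example, a Databricks Notebook activity can be described with a small Python helper like the one below. This is a sketch under assumptions: the notebook path, the linked service name, and the `runDate` pipeline parameter are hypothetical, and the property names reflect the activity JSON as we understand it.

```python
import json


def databricks_notebook_activity(name, linked_service, notebook_path, base_parameters=None):
    """Build a Databricks Notebook activity definition (sketch)."""
    return {
        "name": name,
        "type": "DatabricksNotebook",
        "linkedServiceName": {
            "referenceName": linked_service,  # hypothetical linked service name
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "notebookPath": notebook_path,
            # Values passed to the notebook as parameters.
            "baseParameters": base_parameters or {},
        },
    }


# Hypothetical notebook path and pipeline parameter.
notebook_activity = databricks_notebook_activity(
    "TransformSales",
    "DatabricksLinkedService",
    "/Shared/etl/transform_sales",
    {"run_date": "@pipeline().parameters.runDate"},
)

print(json.dumps(notebook_activity, indent=2))
```

Passing values through `baseParameters` keeps the notebook itself free of environment-specific settings.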

8. Data Lake Analytics Activities

Azure Data Lake Analytics is a distributed analytics service that allows you to run large-scale data processing scripts. ADF includes a U-SQL activity for running these scripts from a pipeline.

Key Activity:

Azure Data Lake Analytics U-SQL: This activity runs U-SQL scripts in Data Lake Analytics, allowing you to perform complex transformations on massive datasets stored in Azure Data Lake.

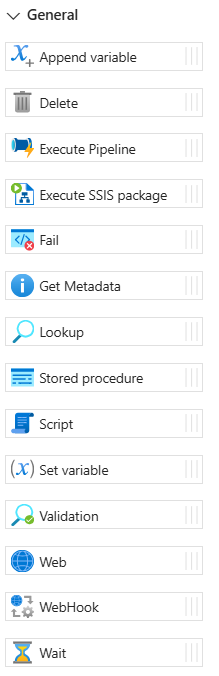

9. General Activities

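The General category contains versatile activities that apply to a wide range of data pipelines. If Condition and Until are included below because they are so often used alongside these activities; in the authoring UI they sit under Iteration & conditionals.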

Common Activities:

Execute Pipeline: Runs another pipeline as part of your workflow, facilitating modular and reusable pipeline design.

Wait: Introduces a delay into your pipeline. This is useful when you need to pause execution for a specific period before moving on to the next activity.

Get Metadata: Retrieves metadata from a data source, such as schema information or file properties, for use in subsequent activities.

Set Variable: Assigns a value to a pipeline variable, which later activities in the same pipeline can then read.

If Condition: A branching activity that runs different sets of activities based on a condition (true or false).

Until: A looping activity that runs a set of activities until a specified condition is met.

Append Variable: Appends a value to an existing array variable, which is useful for accumulating results during pipeline execution.
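Several of these activities are usually chained together. As a rough sketch, the Python below builds a pipeline in which Get Metadata runs first, Set Variable stores one of its outputs, and Wait pauses before anything else would run. The dataset name `InputFileDataset`, the variable name, and the activity names are hypothetical; `dependsOn` expresses the ordering.

```python
import json


def depends_on(activity_name, condition="Succeeded"):
    """Run only after the named activity finishes with the given condition."""
    return [{"activity": activity_name, "dependencyConditions": [condition]}]


get_metadata = {
    "name": "GetFileInfo",
    "type": "GetMetadata",
    "typeProperties": {
        # Hypothetical dataset pointing at a single file.
        "dataset": {"referenceName": "InputFileDataset", "type": "DatasetReference"},
        "fieldList": ["size", "lastModified"],
    },
}

set_variable = {
    "name": "RememberLastModified",
    "type": "SetVariable",
    "dependsOn": depends_on("GetFileInfo"),
    "typeProperties": {
        "variableName": "lastModified",
        "value": {
            "value": "@string(activity('GetFileInfo').output.lastModified)",
            "type": "Expression",
        },
    },
}

wait = {
    "name": "PauseBeforeNextStep",
    "type": "Wait",
    "dependsOn": depends_on("RememberLastModified"),
    "typeProperties": {"waitTimeInSeconds": 30},
}

general_pipeline = {
    "name": "GeneralActivitiesDemo",
    "properties": {
        # Variables must be declared before Set Variable can use them.
        "variables": {"lastModified": {"type": "String"}},
        "activities": [get_metadata, set_variable, wait],
    },
}

print(json.dumps(general_pipeline, indent=2))
```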

10. HDInsight Activities

Azure HDInsight is a fully managed cloud service for processing big data with popular open-source frameworks such as Hadoop, Spark, and Hive.

Key Activities:

HDInsight Hive: Executes Hive scripts to analyze large datasets stored in Hadoop or Azure Blob Storage.

HDInsight Pig: Runs Pig scripts for transforming and analyzing large-scale datasets.

HDInsight MapReduce: Allows running MapReduce jobs on HDInsight for processing large datasets.

HDInsight Spark: Executes Spark jobs on HDInsight clusters, offering distributed computing power for large-scale data processing.

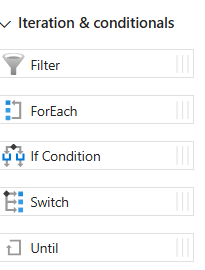

11. Iteration & Conditionals

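ADF supports looping and conditional logic through the Iteration & conditionals category, allowing pipelines to handle complex workflows that depend on data conditions. Besides the activities listed below, this category also contains Until, which repeats a set of activities until a condition is met.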

Key Activities:

ForEach: Executes a set of activities for each item in a collection.

Filter: Filters data from a collection based on a specified condition.

If Condition: Allows you to define logic to branch the execution based on whether the condition evaluates to true or false.

Switch: Routes execution based on the value of an expression, similar to a switch statement in programming languages.

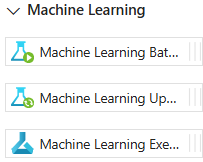
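Looping and branching are often combined. The sketch below, written in Python, nests an If Condition inside a ForEach so that a child pipeline runs only for items flagged as enabled. The `tables` parameter, the `enabled` and `name` fields on each item, and the child pipeline `LoadSingleTable` are hypothetical.

```python
import json


def expression(value):
    """Wrap a string as an ADF expression object."""
    return {"value": value, "type": "Expression"}


if_enabled = {
    "name": "IfTableEnabled",
    "type": "IfCondition",
    "typeProperties": {
        "expression": expression("@equals(item().enabled, true)"),
        "ifTrueActivities": [
            {
                "name": "LoadTable",
                "type": "ExecutePipeline",
                "typeProperties": {
                    # Hypothetical child pipeline that loads one table.
                    "pipeline": {
                        "referenceName": "LoadSingleTable",
                        "type": "PipelineReference",
                    },
                    "parameters": {"tableName": expression("@item().name")},
                    "waitOnCompletion": True,
                },
            }
        ],
        "ifFalseActivities": [],
    },
}

for_each = {
    "name": "ForEachTable",
    "type": "ForEach",
    "typeProperties": {
        "items": expression("@pipeline().parameters.tables"),
        "isSequential": False,  # allow iterations to run in parallel
        "batchCount": 4,        # at most four iterations at a time
        "activities": [if_enabled],
    },
}

loop_pipeline = {
    "name": "LoadEnabledTables",
    "properties": {
        "parameters": {"tables": {"type": "Array"}},
        "activities": [for_each],
    },
}

print(json.dumps(loop_pipeline, indent=2))
```

Delegating the per-item work to a child pipeline keeps the loop body small and makes the load logic reusable elsewhere.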

12. Machine Learning Activities

ADF integrates with machine learning services, allowing you to incorporate predictive analytics and model scoring directly into your data pipelines.

Key Activities:

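Machine Learning Execute Pipeline: Invokes a published Azure Machine Learning pipeline, enabling end-to-end integration with machine learning workflows. It is distinct from the general Execute Pipeline activity, which calls another ADF pipeline.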

Batch Scoring: Performs batch scoring on data using a machine learning model.

Web: Calls an external REST endpoint, such as a model-scoring service, from within the pipeline. In the authoring UI this activity sits under General.

13. Power Query Activity

Power Query is a data transformation engine designed for self-service data preparation. ADF includes Power Query as an activity, making it easy to apply transformations from Power Query’s rich set of tools directly in your pipelines.

Key Activity:

Power Query: Allows you to transform and prepare data using Power Query logic, leveraging its no-code/low-code interface.

Conclusion

Azure Data Factory offers a wide array of activities that enable businesses to build powerful, scalable, and efficient data pipelines. Whether you’re moving data between stores, transforming it for analysis, or invoking custom machine learning models, there is usually an activity suited to the task. By understanding the various categories and activities available, you can better design pipelines that are tailored to your organization’s specific needs.