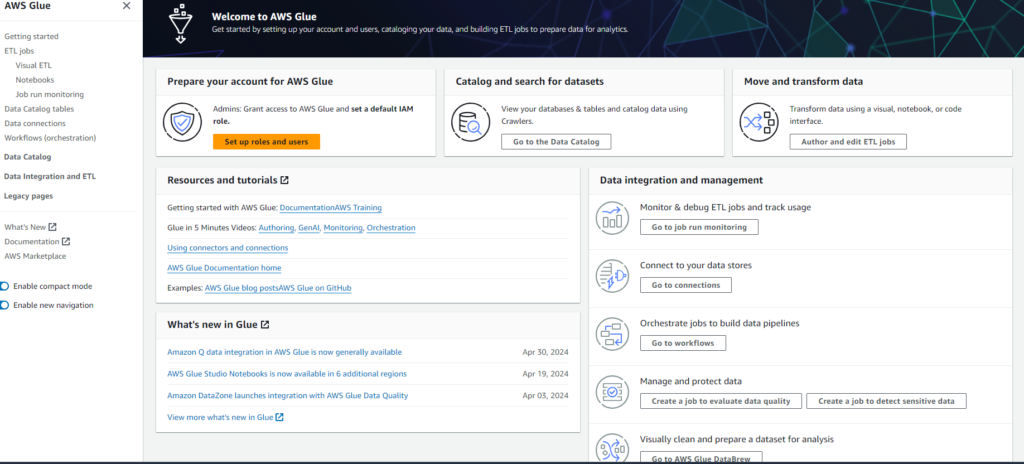

In the age of big data, integrating, transforming, and preparing data for analytics can be a complex task. AWS Glue, Amazon’s fully managed Extract, Transform, Load (ETL) service, is designed to simplify these challenges. Whether you’re working with massive datasets or real-time analytics pipelines, AWS Glue offers a variety of tools to automate the process. In this post, we will take a deep dive into AWS Glue, explaining its features, its benefits, and how to get started.

What is AWS Glue?

AWS Glue is a cloud-based ETL (Extract, Transform, Load) service that automates the process of discovering, cataloging, and transforming data from various sources into a format suitable for analytics. Launched by Amazon Web Services (AWS), Glue integrates seamlessly with other AWS services such as Amazon S3, Amazon RDS, and Amazon Redshift, making it an essential tool for organizations leveraging cloud infrastructure.

The primary goal of AWS Glue is to eliminate the undifferentiated heavy lifting involved in building and managing data pipelines. It offers:

- Serverless Architecture: No infrastructure to manage.

- Integrated Data Catalog: Automatically discovers and catalogs data.

- Supports Popular Data Sources: Works with a variety of data formats and databases.

- Cost-Effective: Pay only for the resources you consume.

Key Features of AWS Glue

- Data Catalog: AWS Glue’s metadata repository automatically catalogs data, making it discoverable for analysis or transformation.

- ETL Jobs: Create and manage ETL jobs through code, a visual interface, or notebooks.

- Workflows: Glue enables you to orchestrate complex multi-step ETL workflows, automating your data pipeline.

- Notebook Integration: AWS Glue supports Jupyter Notebooks, allowing for interactive ETL development.

- Visual ETL (Glue Studio): Build ETL pipelines using a visual interface, ideal for data engineers and analysts who want low-code/no-code options.

- DataBrew: A tool for visually cleaning and transforming raw data without needing deep programming knowledge.

Getting Started with AWS Glue

Setting Up Your Account

Before diving into AWS Glue, you must prepare your AWS account:

- IAM Role Configuration: Glue requires permissions to access data stores and services in AWS. Set up an Identity and Access Management (IAM) role with policies that allow Glue to read from and write to Amazon S3, RDS, Redshift, etc.

- Glue Service Access: Assign roles to users in your organization that determine who can create and manage Glue jobs.

To get started, create a Glue-specific IAM role by following the AWS Glue documentation on Creating an IAM Role for AWS Glue.
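As a rough illustration of what such a role involves, here is a minimal sketch using boto3. The role name is a hypothetical placeholder, and the sketch assumes the AWS-managed `AWSGlueServiceRole` policy is an acceptable baseline. Access to your own data buckets or databases would still need an additional policy that is not shown here.

```python
import json

# Trust policy that lets the AWS Glue service assume the role.
GLUE_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "glue.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# AWS-managed policy with the baseline permissions Glue needs.
GLUE_SERVICE_POLICY_ARN = "arn:aws:iam::aws:policy/service-role/AWSGlueServiceRole"


def create_glue_role(role_name="AWSGlueServiceRole-Example"):  # hypothetical name
    """Create the role and attach the managed policy (requires AWS credentials)."""
    import boto3  # imported lazily so the policy above can be inspected without boto3

    iam = boto3.client("iam")
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(GLUE_TRUST_POLICY),
    )
    iam.attach_role_policy(RoleName=role_name, PolicyArn=GLUE_SERVICE_POLICY_ARN)
    return role["Role"]["Arn"]
```

Calling `create_glue_role()` returns the ARN of the new role, which is the value you later hand to crawlers and jobs.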

Data Cataloging with Crawlers

Data cataloging is one of AWS Glue’s core functionalities. AWS Glue Crawlers automatically scan your data stores, infer schemas, and populate the Glue Data Catalog with metadata that describes your data. Crawlers can be set up to run at scheduled intervals to keep your metadata up to date.

Steps to Create a Crawler:

- Go to the AWS Glue Console and navigate to the Crawlers section.

- Click Add Crawler, and configure the data store (e.g., S3, RDS).

- Set a schedule to run the crawler (e.g., daily, weekly).

- Specify the IAM role that allows the crawler to access the data.

After the crawl, the data is available in the AWS Glue Data Catalog and can be used in your ETL jobs or queried directly using services like Amazon Athena.
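The same crawler setup can also be scripted. The sketch below builds the arguments for boto3's `create_crawler` call. The crawler name, account ID, database name, and bucket path are all made-up placeholders, so treat this as a starting point rather than a finished setup.

```python
def build_crawler_request(name, role_arn, database, s3_path, schedule=None):
    """Return keyword arguments for the Glue CreateCrawler API call."""
    request = {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
    }
    if schedule:
        # Glue schedules use a six-field cron expression, evaluated in UTC.
        request["Schedule"] = schedule
    return request


def create_and_start_crawler(request):
    """Create the crawler and trigger a first run (requires AWS credentials)."""
    import boto3

    glue = boto3.client("glue")
    glue.create_crawler(**request)
    glue.start_crawler(Name=request["Name"])


# All values below are hypothetical examples.
request = build_crawler_request(
    name="example-orders-crawler",
    role_arn="arn:aws:iam::123456789012:role/AWSGlueServiceRole-Example",
    database="example_sales_db",
    s3_path="s3://example-bucket/raw/orders/",
    schedule="cron(0 2 * * ? *)",  # every day at 02:00 UTC
)
```

Passing `request` to `create_and_start_crawler` would register the crawler and start the first crawl. Leaving out `schedule` gives you a crawler that only runs on demand.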

Authoring ETL Jobs

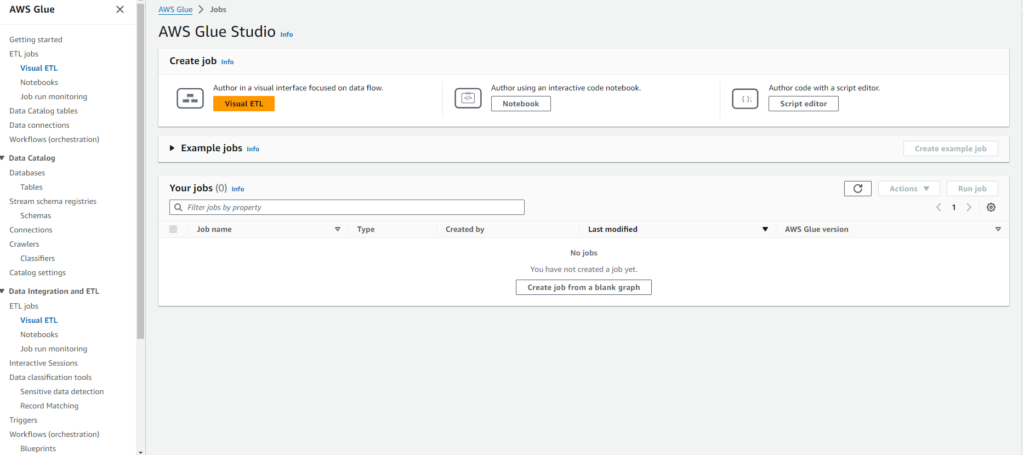

AWS Glue offers three ways to author ETL jobs:

- AWS Glue Studio: A low-code, visual interface.

- Jupyter Notebooks: For those who prefer an interactive Python-based environment.

- AWS Glue SDK and API: For developers who prefer to write custom ETL scripts.

An ETL job consists of:

- Extract: Pulling data from sources such as Amazon S3 or databases.

- Transform: Cleaning and enriching the data, typically done using PySpark within AWS Glue.

- Load: Writing the processed data back into a target destination such as Amazon S3, Redshift, or a relational database.
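To make the three stages concrete, here is a minimal sketch of a PySpark-based Glue script. The database, table, column names, and output path are hypothetical. The `awsglue` imports live inside `run_job` because that library is only available in the Glue runtime, which also lets us try out the transform function on its own.

```python
import sys


def clean_record(rec):
    """Transform step: normalize one record (column names are hypothetical)."""
    rec["customer_email"] = (rec["customer_email"] or "").strip().lower()
    rec["amount"] = float(rec["amount"])
    return rec


def run_job():
    """Extract, transform, and load; only runs inside the AWS Glue runtime."""
    from awsglue.context import GlueContext
    from awsglue.job import Job
    from awsglue.transforms import Map
    from awsglue.utils import getResolvedOptions
    from pyspark.context import SparkContext

    args = getResolvedOptions(sys.argv, ["JOB_NAME"])
    glue_context = GlueContext(SparkContext.getOrCreate())
    job = Job(glue_context)
    job.init(args["JOB_NAME"], args)

    # Extract: read a table that a crawler registered in the Data Catalog.
    orders = glue_context.create_dynamic_frame.from_catalog(
        database="example_sales_db", table_name="raw_orders"
    )

    # Transform: apply clean_record to every record.
    cleaned = Map.apply(frame=orders, f=clean_record)

    # Load: write the result back to S3 as Parquet.
    glue_context.write_dynamic_frame.from_options(
        frame=cleaned,
        connection_type="s3",
        connection_options={"path": "s3://example-bucket/clean/orders/"},
        format="parquet",
    )
    job.commit()
```

When deploying this as a Glue job, you would add a call to `run_job()` at the bottom of the script. Keeping the transform as a plain function means it can be unit tested locally with ordinary dictionaries before the job ever runs in AWS.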

Job Monitoring and Debugging

AWS Glue provides comprehensive job monitoring tools. These tools allow you to view metrics like execution time, memory usage, and data throughput for each job. If an ETL job fails, AWS Glue provides logs through Amazon CloudWatch to help you troubleshoot the issue.

- Job Metrics: Monitor CPU, memory, and data I/O for your ETL jobs.

- Failure Handling: AWS Glue publishes job state changes to Amazon EventBridge, which can invoke an AWS Lambda function when a run fails, enabling you to automate retries or notifications.
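One common way to wire up failure handling is an Amazon EventBridge rule that matches Glue job state changes and invokes a Lambda function. The sketch below shows an event pattern and a small handler. The handler only builds a summary and logs it; where you send the alert (for example, Amazon SNS) is left open, and the job name in the usage example is made up.

```python
# EventBridge event pattern that matches failed or timed-out Glue job runs.
FAILED_JOB_PATTERN = {
    "source": ["aws.glue"],
    "detail-type": ["Glue Job State Change"],
    "detail": {"state": ["FAILED", "TIMEOUT"]},
}


def lambda_handler(event, context):
    """Summarize a Glue job state change event and flag runs that need attention."""
    detail = event.get("detail", {})
    job_name = detail.get("jobName", "unknown")
    run_id = detail.get("jobRunId", "unknown")
    state = detail.get("state", "unknown")
    message = detail.get("message", "")

    should_alert = state in ("FAILED", "TIMEOUT")
    summary = f"Glue job {job_name} (run {run_id}) ended in state {state}: {message}"

    # Output printed by a Lambda function ends up in CloudWatch Logs.
    print(summary)
    return {"alert": should_alert, "summary": summary}


# Local usage with a hand-written sample event (values are hypothetical).
sample_event = {
    "source": "aws.glue",
    "detail-type": "Glue Job State Change",
    "detail": {
        "jobName": "example-orders-etl",
        "state": "FAILED",
        "jobRunId": "jr_example",
        "message": "Command failed",
    },
}
result = lambda_handler(sample_event, None)
```

From here, the handler could publish `result["summary"]` to a notification channel or start a new job run, depending on how you want failures to be treated.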

AWS Glue Studio: Visual ETL

AWS Glue Studio provides a drag-and-drop interface to create ETL workflows without writing code. This feature is particularly useful for users who prefer visual tools over coding.

Key Features of AWS Glue Studio:

- Drag-and-Drop Interface: Build ETL pipelines by connecting components visually.

- Data Preview: View intermediate data at various stages of the pipeline.

- Job Scheduler: Schedule your ETL jobs directly from the visual interface.

AWS Glue Studio is an ideal choice for business users or less technical data engineers, enabling them to build complex workflows without needing to write Python or Spark code.

Orchestration with AWS Glue Workflows

For more advanced use cases, AWS Glue provides workflows that allow you to orchestrate multiple ETL jobs and other related tasks. Workflows can manage complex pipelines with dependencies between jobs.

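- Workflows: Create a pipeline where jobs and crawlers run in sequence or in parallel, with each step starting only once the steps it depends on have reached the required state. Retries are configured on the individual jobs.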

- Triggers: Define event-based or time-based triggers for your workflows.

Use AWS Glue Workflows to manage the full lifecycle of your data from raw ingestion to transformed and cleaned data ready for analysis.
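As a sketch of how jobs are chained, the snippet below builds the requests for a two-step workflow: a scheduled trigger starts the first job, and a conditional trigger starts the second job once the first succeeds. The workflow and job names are hypothetical, and both jobs are assumed to exist already.

```python
def build_workflow_requests(workflow, first_job, second_job, schedule):
    """Return the CreateWorkflow arguments and a list of CreateTrigger arguments."""
    start_trigger = {
        "Name": f"{workflow}-start",
        "WorkflowName": workflow,
        "Type": "SCHEDULED",  # time-based trigger
        "Schedule": schedule,
        "Actions": [{"JobName": first_job}],
        "StartOnCreation": True,
    }
    chain_trigger = {
        "Name": f"{workflow}-after-{first_job}",
        "WorkflowName": workflow,
        "Type": "CONDITIONAL",  # fires when the watched job reaches a state
        "Predicate": {
            "Conditions": [
                {
                    "LogicalOperator": "EQUALS",
                    "JobName": first_job,
                    "State": "SUCCEEDED",
                }
            ]
        },
        "Actions": [{"JobName": second_job}],
        "StartOnCreation": True,
    }
    return {"Name": workflow}, [start_trigger, chain_trigger]


def create_workflow(workflow_request, trigger_requests):
    """Create the workflow and its triggers (requires AWS credentials)."""
    import boto3

    glue = boto3.client("glue")
    glue.create_workflow(**workflow_request)
    for trigger in trigger_requests:
        glue.create_trigger(**trigger)


# Hypothetical names for illustration only.
workflow_request, trigger_requests = build_workflow_requests(
    workflow="example-nightly-pipeline",
    first_job="example-extract-orders",
    second_job="example-transform-orders",
    schedule="cron(0 2 * * ? *)",
)
```

Adding more conditional triggers with the same shape extends the chain, and listing several jobs under one trigger's `Actions` runs them in parallel.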

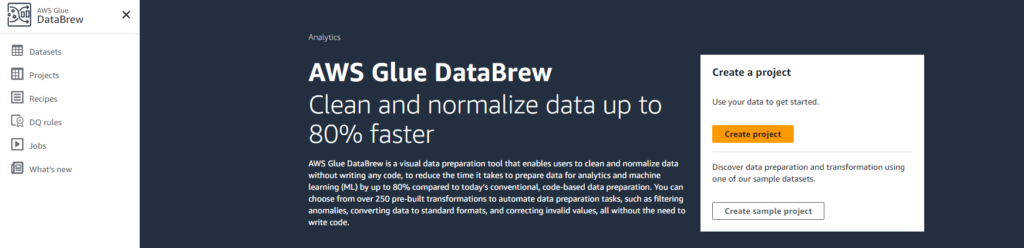

AWS Glue DataBrew: A Visual Data Preparation Tool

AWS Glue DataBrew is a separate offering within Glue that provides a point-and-click interface to clean and normalize data. DataBrew is ideal for business users or data analysts who need to prepare data for analytics but may not have deep coding skills.

- Data Cleaning: Remove duplicates, correct data types, handle missing values.

- Normalization: Normalize columns, standardize data, and create consistent formats.

- Data Enrichment: Enrich datasets with additional columns or reference data.

DataBrew recipes can be saved and reused across multiple datasets, enabling consistent data preparation workflows across the organization.
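To illustrate the idea of a reusable recipe, here is a small conceptual sketch in plain Python. It is not the DataBrew API: the step functions and column names are invented for this example. It simply shows how one ordered list of steps can be applied unchanged to different datasets.

```python
def lowercase(column):
    """Build a step that lowercases the values in one column."""
    def step(rows):
        return [{**row, column: str(row[column]).lower()} for row in rows]
    return step


def fill_missing(column, default):
    """Build a step that replaces missing (None) values with a default."""
    def step(rows):
        filled = []
        for row in rows:
            value = row.get(column)
            filled.append({**row, column: default if value is None else value})
        return filled
    return step


def remove_duplicates(rows):
    """Step that drops rows identical to an earlier row, keeping the first."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def apply_recipe(recipe, rows):
    """Run every step of the recipe, in order, over the dataset."""
    for step in recipe:
        rows = step(rows)
    return rows


# One recipe, defined once and reused across datasets.
RECIPE = [lowercase("country"), fill_missing("plan", "free"), remove_duplicates]

customers = [
    {"country": "DE", "plan": None},
    {"country": "de", "plan": "free"},
    {"country": "US", "plan": "pro"},
]
leads = [{"country": "FR", "plan": None}]

clean_customers = apply_recipe(RECIPE, customers)
clean_leads = apply_recipe(RECIPE, leads)
```

Because the recipe is just data, the same cleaning rules are applied to `customers` and `leads` without anyone re-implementing them, which is the consistency benefit DataBrew recipes aim for.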

Cost Management and Pricing

AWS Glue uses a pay-as-you-go pricing model, where you are charged based on:

- Data Catalog Storage: Charged for the number of metadata objects stored and the requests made against the catalog, beyond a monthly free tier.

- ETL Job Duration: Billed per DPU-hour, that is, the number of Data Processing Units (DPUs) a job uses multiplied by how long it runs.

- DataBrew: Interactive sessions are billed per session, and DataBrew jobs are billed by the node-hours they consume.

You can reduce costs by optimizing job execution times, choosing the right data storage options, and tracking usage with job metrics and AWS billing tools such as AWS Cost Explorer.

For more information, visit AWS Glue’s Pricing Page.
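To get a feel for how DPU-based billing adds up, here is a back-of-the-envelope estimator. The rate used in the example is an illustrative figure, not a quoted price, and the one-minute minimum billing duration is an assumption that depends on the Glue version, so check the pricing page for the values that apply to your region and job type.

```python
def estimate_job_cost(dpus, runtime_seconds, rate_per_dpu_hour, min_billed_seconds=60):
    """Estimate the cost of one job run as DPUs x billed hours x hourly rate.

    min_billed_seconds is an assumed minimum billing duration.
    """
    billed_seconds = max(runtime_seconds, min_billed_seconds)
    return dpus * (billed_seconds / 3600) * rate_per_dpu_hour


# Example: 10 DPUs for 15 minutes at an illustrative rate of 0.44 per DPU-hour.
cost = estimate_job_cost(dpus=10, runtime_seconds=900, rate_per_dpu_hour=0.44)
print(f"${cost:.2f}")  # prints $1.10
```

The formula makes the two main levers visible: fewer DPUs or a shorter runtime both reduce the bill in direct proportion.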

Conclusion

AWS Glue is a powerful, serverless solution for anyone looking to simplify data integration, ETL processes, and cataloging within the AWS ecosystem. Its rich feature set, including support for visual ETL, Jupyter notebooks, and workflows, makes it an ideal tool for organizations of all sizes.

Whether you’re a data engineer creating complex data pipelines or a business analyst needing to quickly clean data, AWS Glue has the tools to meet your needs.