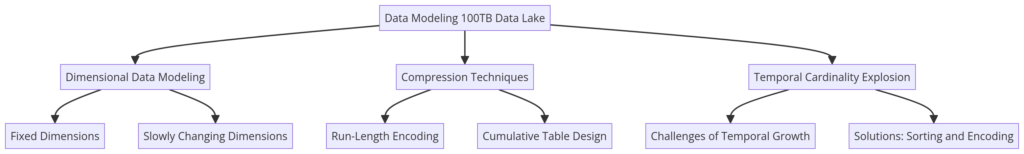

Managing massive datasets in data lakes is a common challenge for data engineers. As data volumes grow, so does the complexity of efficiently modeling, transforming, and compressing this data for analysis and decision-making. In this article, we explore advanced data modeling techniques, focusing on dimensional data modeling, cumulative table design, compression with run-length encoding, and managing temporal cardinality explosions, drawing insights from practical applications at companies like Airbnb, Netflix, and Facebook.

Dimensional Data Modeling

Dimensional data modeling is a method used to design data structures optimized for query performance and analytics. This approach revolves around dimensions (attributes like time, location, or category) and facts (measurable events or data points). Understanding and implementing dimensional modeling correctly is critical for ensuring that data is both accessible and valuable.

Key Concepts:

- Fixed Dimensions: These are attributes that do not change over time, such as a user’s birthdate or signup date. They are simple to model because they remain constant.

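- Slowly Changing Dimensions (SCDs): These are dimensions that can change over time, such as a user’s favorite food or address. Modeling SCDs requires a strategy for handling those changes: either overwrite the old value and keep only the latest one (commonly called Type 1), or keep a separate record for each value along with the date range during which it was valid (Type 2), which preserves history.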
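To make the Type 2 approach concrete, here is a minimal Python sketch. It assumes the source data arrives as hypothetical daily snapshots mapping `user_id` to `favorite_food`, and it collapses consecutive days with the same value into a single record with a start and end date. The `ScdRecord` type and `build_scd2` function are illustrative names, not part of any library.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List


@dataclass
class ScdRecord:
    """One Type 2 row: a value and the inclusive date range it was valid for."""
    user_id: int
    favorite_food: str
    start_date: date
    end_date: date


def build_scd2(snapshots: Dict[date, Dict[int, str]]) -> List[ScdRecord]:
    """Collapse daily snapshots {day: {user_id: favorite_food}} into Type 2 rows.

    A new record starts whenever a user's value differs from the previous
    day's value, or the user was missing from the previous day's snapshot.
    """
    open_records: Dict[int, ScdRecord] = {}  # latest record per user
    result: List[ScdRecord] = []
    for day in sorted(snapshots):
        for user_id, food in snapshots[day].items():
            current = open_records.get(user_id)
            if (current is not None and current.favorite_food == food
                    and current.end_date == day - timedelta(days=1)):
                current.end_date = day  # unchanged on a consecutive day: extend
            else:
                if current is not None:
                    result.append(current)  # close the previous record
                open_records[user_id] = ScdRecord(user_id, food, day, day)
    result.extend(open_records.values())
    return sorted(result, key=lambda r: (r.user_id, r.start_date))


# Hypothetical example: user 1 changes from pizza to sushi on day three.
start = date(2024, 1, 1)
snapshots = {
    start: {1: "pizza", 2: "tacos"},
    start + timedelta(days=1): {1: "pizza", 2: "tacos"},
    start + timedelta(days=2): {1: "sushi", 2: "tacos"},
}
for record in build_scd2(snapshots):
    print(record)
```

Three days of snapshots for two users shrink to three records, and the history of user 1’s change is preserved rather than overwritten.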

Cumulative Table Design

Cumulative table design helps manage large datasets by storing changes over time compactly. Instead of keeping a separate full snapshot for every day, which is bulky and inefficient, a cumulative table carries all history forward in a single, evolving dataset: each day’s run combines the previous day’s cumulative table with that day’s new data. This design drastically reduces storage needs and improves query performance.

Benefits:

- Compact Storage: Storing data in cumulative tables cuts down the data volume significantly, sometimes by over 90%. This is achieved by maintaining an array of changes rather than separate records for each day.

- Efficient Querying: With cumulative tables, you can query historical data without needing to scan multiple partitions, reducing the data scanned and speeding up query response times.
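The daily update behind a cumulative table can be sketched in memory as follows. This assumes a hypothetical table (`UserCumulated`) with one row per user and a `dates_active` array; in a warehouse the same step is typically written as a SQL FULL OUTER JOIN between yesterday’s cumulative partition and today’s activity. The names here are illustrative only.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List, Set


@dataclass
class UserCumulated:
    """One row of a hypothetical cumulative table: all history for one user."""
    user_id: int
    dates_active: List[date] = field(default_factory=list)  # newest first


def cumulate(yesterday: Dict[int, UserCumulated],
             today_active: Set[int],
             today: date) -> Dict[int, UserCumulated]:
    """Build today's cumulative table from yesterday's table plus today's activity.

    Mirrors a FULL OUTER JOIN on user_id: users only in yesterday's table carry
    their history forward unchanged; users active today get today's date
    prepended, and brand-new users start a fresh array.
    """
    result: Dict[int, UserCumulated] = {}
    for user_id in yesterday.keys() | today_active:
        previous = yesterday.get(user_id)
        history = list(previous.dates_active) if previous else []
        if user_id in today_active:
            history.insert(0, today)
        result[user_id] = UserCumulated(user_id, history)
    return result


# Three daily runs; each run reads only the previous day's output.
table: Dict[int, UserCumulated] = {}
daily_activity = {
    date(2024, 1, 1): {1, 2},
    date(2024, 1, 2): {1},
    date(2024, 1, 3): {2, 3},
}
for day in sorted(daily_activity):
    table = cumulate(table, daily_activity[day], day)

for user_id in sorted(table):
    print(user_id, [d.isoformat() for d in table[user_id].dates_active])
```

Because each user’s whole history lives in one row, a question such as “was this user active in the last seven days?” can be answered from today’s partition alone rather than by scanning seven daily partitions.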

Temporal Cardinality Explosion

Adding a temporal aspect to dimensions, such as modeling data daily or hourly, can lead to what’s known as a temporal cardinality explosion. This occurs when the number of data rows multiplies dramatically due to the added time dimension. For instance, modeling daily availability for Airbnb’s 10 million listings over a year yields 10 million × 365 ≈ 3.65 billion rows.

Mitigation Strategies:

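- Sorting and Encoding: Sort the data so that identical values sit next to each other before it is encoded. Encoding techniques like run-length encoding (RLE) store each run of repeated values only once, so long runs compress well. If a later step, such as a shuffle during a join, reorders the rows, the runs can break up and much of the compression benefit is lost.

- Using STRUCTs and ARRAYs: Arrays and structures (STRUCTs) let related data points be stored together, which keeps data compact and easy to access. For example, instead of one row per listing per day, each listing can have a single row holding an array of 365 daily structs, so the row count stays at the number of listings. This is particularly effective when dimensions have a temporal aspect.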
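Both mitigations can be illustrated with a toy Python sketch. It assumes a hypothetical listing-availability table scaled down to 100 listings, with a made-up availability pattern. It counts runs (the number of value/count pairs an RLE encoder would have to store for a column) in shuffled versus sorted order, then nests the rows into one list of `(day, available)` tuples per listing, a plain-Python stand-in for an `ARRAY<STRUCT<...>>` column.

```python
import random
from itertools import groupby
from typing import Dict, List, Tuple

# Hypothetical exploded table: one row per (listing_id, day).
rows: List[Tuple[int, int, bool]] = [
    (listing_id, day, day % 7 < 5)  # made-up availability pattern
    for listing_id in range(100)
    for day in range(365)
]


def count_runs(values) -> int:
    """Number of runs of consecutive equal values, i.e. entries RLE must store."""
    return sum(1 for _ in groupby(values))


shuffled = rows[:]
random.Random(0).shuffle(shuffled)  # simulate rows arriving out of order
sorted_rows = sorted(shuffled)      # sort by listing_id, then day

print("listing_id runs, shuffled:", count_runs(r[0] for r in shuffled))
print("listing_id runs, sorted:  ", count_runs(r[0] for r in sorted_rows))

# Nest: one row per listing holding an array of (day, available) "structs".
nested: Dict[int, List[Tuple[int, bool]]] = {}
for listing_id, day, available in sorted_rows:
    nested.setdefault(listing_id, []).append((day, available))

print("exploded rows:", len(rows), "nested rows:", len(nested))
```

After sorting, the `listing_id` column collapses to one run per listing, while the shuffled order produces tens of thousands of short runs; nesting cuts the row count from 36,500 to 100.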

Compression Techniques: Run-Length Encoding

Compression plays a vital role in data management, especially in large-scale data lakes. Run-length encoding is a popular method that reduces data size by collapsing consecutive repeated values into a single entry, which makes it an essential tool for data engineers.

How RLE Works:

- RLE stores each run of consecutive repeated values as a single value with a count, instead of storing every occurrence separately. For example, in a dataset of a person’s daily activity status, seven consecutive days of “active” would be recorded as “active (x7)” rather than as seven separate entries.

- Parquet, a columnar storage format, can apply RLE (together with dictionary encoding) within each column, so columns with long runs of repeated values, such as sorted low-cardinality columns, compress especially well. Combined this way, RLE can shrink data volumes dramatically, sometimes by up to 80%.
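The core idea can be sketched in a few lines of Python. This is a conceptual illustration only, not Parquet’s actual encoder, which uses a hybrid of RLE and bit-packing on dictionary indices; `rle_encode` and `rle_decode` are hypothetical helper names.

```python
from itertools import groupby
from typing import List, Tuple, TypeVar

T = TypeVar("T")


def rle_encode(values: List[T]) -> List[Tuple[T, int]]:
    """Collapse consecutive repeats into (value, run_length) pairs."""
    return [(value, sum(1 for _ in group)) for value, group in groupby(values)]


def rle_decode(runs: List[Tuple[T, int]]) -> List[T]:
    """Expand (value, run_length) pairs back into the original sequence."""
    return [value for value, count in runs for _ in range(count)]


week = ["active"] * 7 + ["inactive"] * 2 + ["active"]
encoded = rle_encode(week)
print(encoded)  # [('active', 7), ('inactive', 2), ('active', 1)]
assert rle_decode(encoded) == week  # lossless round trip
```

Note that the final “active” becomes its own run because it is not adjacent to the first seven; this is exactly why sorting before encoding matters.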

Conclusion

Effective data modeling and compression are critical in managing modern data lakes. By using advanced techniques like cumulative table design, dimensional modeling, and run-length encoding, data engineers can turn unwieldy datasets into compact, manageable, and highly performant data assets. These strategies not only reduce storage costs but also improve the speed and reliability of data queries, making them indispensable in any large-scale data operation.