Businesses increasingly need a single system that can analyze both real-time and historical data if they want to stay competitive. Event analytics helps them gain insights from data streams and act on those insights in real time, while also drawing insights from accumulated historical data. In this blog post, we explore the concept of event analytics, the workloads and item types it requires, and a use case that demonstrates how it supports data-driven decisions.

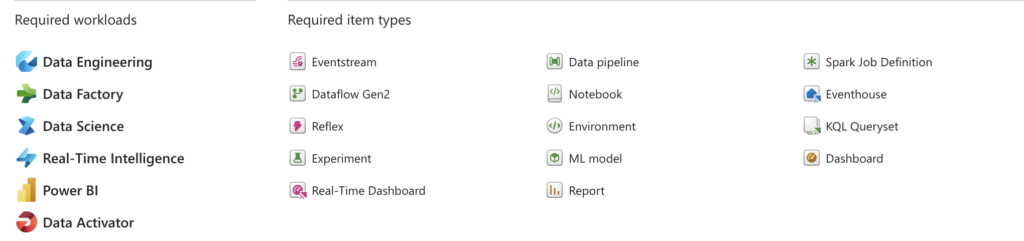

Required Workloads and Item Types for Event Analytics

Implementing event analytics in Microsoft Fabric requires a specific set of workloads and item types that together integrate real-time and batch processing. Here are the components needed:

1. Required Workloads:

Data Engineering: Responsible for data ingestion, transformation, and ensuring that data is in the correct format for analysis.

Data Factory: Enables orchestration of data pipelines and seamless movement of data between sources and destinations.

Data Science: Builds predictive models from both historical and real-time data to generate valuable insights.

Real-Time Intelligence: Manages and processes streaming data, allowing for real-time insights and actions.

Power BI: Provides visualization and reporting to make data understandable and actionable for stakeholders.

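Data Activator: Monitors incoming data and automatically triggers actions, such as alerts, when specified conditions are met, enabling real-time decisions based on the latest available data.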

2. Required Item Types:

Eventstream: Captures data streams in real time from various sources, enabling the analysis of up-to-date information.

Dataflow Gen2: Manages data transformations, ensuring that data is prepared for further analysis.

Reflex: The Data Activator item that defines which conditions to watch for in event data and which actions, such as alerts, to take when those conditions occur.

Experiment: Tracks machine learning runs (parameters, metrics, and outputs) so that data logic and predictive models can be compared and validated.

Real-Time Dashboard: Displays live data visualizations that help stakeholders monitor key metrics and make informed decisions.

Data Pipeline: Manages the ingestion and movement of batch data to different processing stages.

Notebook: An interactive environment used for data exploration, analysis, and documentation.

Environment: Bundles the Spark compute settings and libraries that notebooks and Spark jobs need for data processing and analysis.

ML Model: Stores and versions trained machine learning models so they can be used for both batch and real-time predictions.

Spark Job Definition: Defines and executes batch processing jobs, ensuring efficient handling of large data sets.

Eventhouse: Stores raw event data in KQL databases optimized for large volumes of time-based events, where it can be queried and processed for analytics.

KQL Queryset: Stores and runs Kusto Query Language (KQL) queries against event data, providing insights as new events arrive.

Dashboard: Displays consolidated metrics, combining insights from both batch and real-time data.

Report: Provides summarized information for stakeholders, offering a clear overview of key insights.

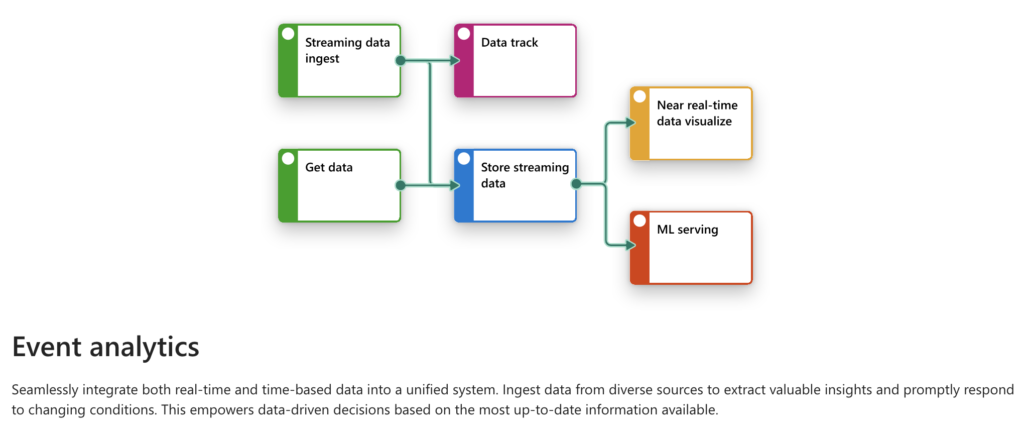

Event Analytics Design Flow

Event analytics integrates real-time and batch data into a unified system that delivers up-to-date insights and supports informed decisions. Below is a high-level overview of the event analytics design flow:

Streaming Data Ingest & Get Data:

Streaming Data Ingest: Real-time data is streamed in from sources such as IoT sensors, web applications, and social media feeds.

Get Data: Data is retrieved at regular intervals, including historical data from legacy systems or external databases.

Data Track & Store Streaming Data:

Data Track: Tracks incoming events to ensure consistency, enabling quick identification of anomalies.

Store Streaming Data: Stores raw data from real-time streams, creating a repository that can be queried and analyzed.
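The Data Track and Store Streaming Data steps can be illustrated with a small, self-contained Python sketch. It does not reflect how Eventstream or Eventhouse work internally; it only demonstrates the idea, under the assumption that each event carries a single numeric value. The hypothetical `EventTracker` keeps every raw event in an in-memory list (standing in for the streaming data store) and flags an event as anomalous when it lies more than `z_threshold` standard deviations from the mean of the previous `window` values.

```python
from collections import deque
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class Event:
    source: str       # e.g. a sensor or feed name (hypothetical)
    timestamp: float  # seconds since epoch
    value: float      # the numeric quantity being tracked


@dataclass
class EventTracker:
    """Hypothetical tracker that stores every raw event and flags outliers."""

    window: int = 20          # number of previous values used as the baseline
    z_threshold: float = 3.0  # how many standard deviations counts as anomalous
    store: list = field(default_factory=list)     # stand-in for the raw event store
    recent: deque = field(default_factory=deque)  # rolling window of recent values

    def track(self, event: Event) -> bool:
        """Store the event and return True if it looks anomalous."""
        is_anomaly = False
        if len(self.recent) >= 2:  # need at least two values to measure spread
            mu = mean(self.recent)
            sigma = pstdev(self.recent)
            deviation = abs(event.value - mu)
            # With zero spread, any change from the constant baseline is flagged.
            is_anomaly = deviation > 0 if sigma == 0 else deviation / sigma > self.z_threshold
        # Keep every raw event, anomalous or not, so it can be queried later.
        self.store.append(event)
        self.recent.append(event.value)
        if len(self.recent) > self.window:
            self.recent.popleft()
        return is_anomaly


tracker = EventTracker(window=5, z_threshold=3.0)
readings = [20.1, 19.8, 20.3, 20.0, 19.9, 35.0, 20.2]
flags = [tracker.track(Event("sensor-1", float(t), v)) for t, v in enumerate(readings)]
print(flags)  # only the 35.0 reading is flagged
```

Flagged values still enter the rolling window, so a sustained level shift soon stops being flagged; excluding anomalies from the window is an equally valid alternative.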

Near Real-Time Data Visualization & ML Serving:

Near Real-Time Data Visualization: Data is visualized in near real-time, providing users with immediate insights through dashboards and interactive visual tools.

ML Serving: Machine learning models are used to provide predictive insights and decision support based on streaming data, allowing organizations to react quickly to changing circumstances.
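One common way to prepare streaming data for near real-time visualization is a tumbling-window aggregation: raw (timestamp, value) pairs are grouped into fixed, non-overlapping intervals, and each interval is reduced to a few numbers that a dashboard tile can refresh. The sketch below is a plain-Python illustration of that technique; the function name and the 60-second window are assumptions, not part of any Fabric API.

```python
from collections import defaultdict


def tumbling_window_summary(events, window_seconds=60):
    """Group (timestamp, value) pairs into fixed, non-overlapping windows.

    Returns {window_start: (count, average_value)} ordered by window start.
    """
    buckets = defaultdict(list)
    for timestamp, value in events:
        # Floor division maps each timestamp to the start of its window.
        window_start = int(timestamp // window_seconds) * window_seconds
        buckets[window_start].append(value)
    return {
        start: (len(values), sum(values) / len(values))
        for start, values in sorted(buckets.items())
    }


events = [(0, 10.0), (15, 20.0), (59, 30.0), (60, 5.0), (125, 7.0), (130, 9.0)]
summary = tumbling_window_summary(events, window_seconds=60)
print(summary)  # {0: (3, 20.0), 60: (1, 5.0), 120: (2, 8.0)}
```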

Use Case: Real-Time Customer Sentiment Analysis

Consider a retail company that wants to understand customer sentiment in real time across multiple social media platforms, while also analyzing historical trends. Here’s how event analytics can help:

Streaming Data Ingest & Data Retrieval: The company uses Eventstream to capture data from social media platforms like Twitter, Facebook, and Instagram. Additionally, historical customer feedback data is retrieved using the Get Data process.

Data Track & Store Streaming Data: Incoming social media posts are tracked using Data Track, ensuring that customer mentions are captured effectively. The data is then stored in a Streaming Data Store, which acts as a centralized repository for all social media activity.

Real-Time Dashboard & Near Real-Time Visualization: A Real-Time Dashboard presents the sentiment scores, customer mentions, and emerging topics of interest. The Near Real-Time Visualization of this data allows social media managers to immediately react to customer concerns or positive mentions.

Machine Learning Model Serving: The company uses an ML Model to automatically classify customer sentiment as positive, negative, or neutral. The model's output feeds ML Serving, which triggers personalized responses to customer posts and generates alerts for critical issues.

Reflex & Real-Time Adjustments: Using Reflex, the system can adjust marketing campaigns or trigger customer support interventions when negative sentiment is detected, ensuring that issues are addressed promptly.
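The sentiment classification and Reflex steps of this use case can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Fabric code: a toy word-list classifier stands in for the trained ML Model, and the hypothetical `NegativeSentimentRule` class mimics the kind of condition-plus-action rule a Reflex item expresses. The word lists, window size, and threshold are illustrative choices.

```python
import re
from collections import deque

# Hypothetical word lists: a toy stand-in for a trained sentiment model.
POSITIVE = {"love", "great", "excellent", "happy", "fast"}
NEGATIVE = {"broken", "late", "refund", "terrible", "angry"}


def classify_sentiment(text: str) -> str:
    """Label a post positive, negative, or neutral by counting lexicon hits."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


class NegativeSentimentRule:
    """Hypothetical rule: act when negative posts exceed a share of recent posts.

    Edge-triggered: fires once when the condition becomes true and re-arms
    only after it clears, so a sustained spike produces one alert, not many.
    """

    def __init__(self, window=5, threshold=0.4, action=print):
        self.labels = deque(maxlen=window)  # sliding window of recent labels
        self.threshold = threshold
        self.action = action
        self.active = False

    def observe(self, label: str) -> bool:
        self.labels.append(label)
        share = sum(lbl == "negative" for lbl in self.labels) / len(self.labels)
        condition = share > self.threshold
        fired = condition and not self.active
        if fired:
            self.action(f"Negative share {share:.0%} over last {len(self.labels)} posts")
        self.active = condition
        return fired


alerts = []
rule = NegativeSentimentRule(window=5, threshold=0.4, action=alerts.append)
posts = [
    "I love the new store, checkout was fast!",
    "Visited the shop today.",
    "My order arrived late and the box was broken.",
    "Still waiting on my refund.",
    "Terrible support, I am angry.",
]
labels = [classify_sentiment(p) for p in posts]
fired = [rule.observe(lbl) for lbl in labels]
print(labels, fired, alerts)
```

Making the rule edge-triggered is a design choice: a level-triggered rule would call the action on every post while negative sentiment stays high, which quickly floods a support team with duplicate alerts.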

Benefits of Event Analytics

Real-Time Insights: Provides the ability to see changes and trends as they happen, enabling proactive decisions.

Customer Engagement: Helps businesses engage with customers in real time, improving satisfaction and brand loyalty.

Scalable Solutions: Scales with growing data volume from both streaming and batch sources, providing continuous, uninterrupted insights.

Improved Predictive Capabilities: Combining historical and real-time data allows for more accurate predictions and a better understanding of customer behavior.
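Combining historical and real-time data can start with something as simple as comparing a live measurement against a baseline computed in batch. The hypothetical `sentiment_spike` function below divides today's streaming negative-post rate by the historical average daily rate. It illustrates the combination only and is not itself a predictive model; the input values are made up for the example.

```python
from statistics import mean


def sentiment_spike(historical_daily_negative_rates, live_negative, live_total):
    """Compare today's live negative-post rate with a historical baseline.

    historical_daily_negative_rates: batch-computed daily rates (0.0 to 1.0).
    live_negative / live_total: counts accumulated from the stream today.
    Returns live_rate / baseline; values well above 1.0 suggest an unusual
    shift. Returns None when there is not enough data to compare.
    """
    baseline = mean(historical_daily_negative_rates)
    if live_total == 0 or baseline == 0:
        return None
    live_rate = live_negative / live_total
    return live_rate / baseline


ratio = sentiment_spike([0.10, 0.12, 0.08, 0.10], live_negative=45, live_total=150)
print(round(ratio, 2))  # 3.0: today's negative rate is about three times the norm
```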

Challenges of Event Analytics

Data Complexity: Managing both streaming and batch data can be complex and require specialized tools and skills.

Resource Intensive: Requires infrastructure capable of handling high-velocity data streams and storing large volumes of data.

Data Quality: Ensuring the quality of data streams in real time can be challenging, especially with noisy or incomplete data.
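A common first defense against noisy or incomplete streams is to validate each event on arrival and route failures aside with a reason, rather than dropping them silently. The sketch below assumes a hypothetical event schema (`event_id`, `timestamp`, `text`) and checks for missing fields, wrong types, empty text, and duplicate IDs within a batch.

```python
# Hypothetical schema: field name -> accepted type(s).
REQUIRED_FIELDS = {"event_id": str, "timestamp": (int, float), "text": str}


def validate(events):
    """Split raw events into clean records and (event, reason) rejects."""
    clean, rejected, seen = [], [], set()
    for event in events:
        reason = None
        for name, expected in REQUIRED_FIELDS.items():
            if name not in event or event[name] is None:
                reason = f"missing {name}"
                break
            if not isinstance(event[name], expected):
                reason = f"bad type for {name}"
                break
        if reason is None and not event["text"].strip():
            reason = "empty text"
        if reason is None and event["event_id"] in seen:
            reason = "duplicate event_id"
        if reason is None:
            seen.add(event["event_id"])
            clean.append(event)
        else:
            rejected.append((event, reason))  # keep rejects for later inspection
    return clean, rejected


batch = [
    {"event_id": "e1", "timestamp": 1700000000, "text": "Great service"},
    {"event_id": "e2", "timestamp": "yesterday", "text": "Slow checkout"},
    {"event_id": "e3", "text": "No timestamp here"},
    {"event_id": "e1", "timestamp": 1700000005, "text": "Great service"},
    {"event_id": "e4", "timestamp": 1700000010, "text": "   "},
]
clean, rejected = validate(batch)
print([e["event_id"] for e in clean], [reason for _, reason in rejected])
```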

Conclusion

Event analytics is a powerful approach for businesses that need to integrate real-time and historical data to drive meaningful insights. By leveraging a variety of workloads and item types, event analytics can deliver a seamless data processing experience, offering both immediate insights and long-term analytical capabilities.

Whether you are a retailer trying to enhance customer engagement, a manufacturer looking to predict machine maintenance needs, or a financial services provider wanting to monitor transactions in real time, event analytics provides the tools needed to make data-driven decisions that drive success.